A team of students in the computational science and engineering master’s program worked with Square to develop a tool that analyzes the interpretability and fairness of machine-learning models.

The concept of fairness in financial lending is thorny, with banks frequently accused of using deceptive or abusive practices. Add machine-learning algorithms into the mix and things can get downright dicey.

How can a financial institution guarantee that its artificial intelligence is making fair determinations? And what if a rejected borrower asks for the reason she was turned down by the algorithm?

A team of students in the computational science and engineering master’s program offered by the Institute for Applied Computational Science (IACS) at the Harvard John A. Paulson School of Engineering and Applied Sciences dove into these knotty questions during a semester-long project with Square Capital.

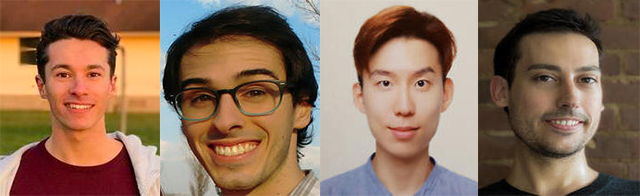

The team, composed of Paul Blankley, S.M. ’18, Camilo Fosco, M.E. ’19, Ryan Janssen, S.M. ’18, and Donghun Lee, M.E. ’19, worked with Square to develop a tool that analyzes the interpretability and fairness of machine-learning models.

“Square can achieve a lot higher accuracy using a machine-learning model than having humans look at everything,” Blankley said. “But without interpretability—without the ability to give a reason behind a decision—you wouldn’t be able to use these high-accuracy models in these types of sensitive situations.”

Students (from left) Paul Blankley, S.M. ’18, Camilo Fosco, M.E. ’19, Donghun Lee, M.E. ’19, and Ryan Janssen, S.M. ’18, developed a tool that analyzes the interpretability and fairness of machine-learning models.

A machine-learning model’s interpretability is centered on the features that were influential in its decision about a specific observation, Blankley said. The students evaluated interpretability using several different computational approaches. One technique, input gradients, involves computing the derivatives of the model’s output with respect to each data input to ascertain the relative importance of each feature.
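As a rough illustration of the input-gradient idea, the sketch below uses a toy logistic model, for which the derivative of the predicted probability with respect to each input has a simple closed form. The feature names, weights, and applicant values are hypothetical and chosen only for illustration; this is not the students’ code or Square’s model.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Approval probability from a toy logistic model."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return sigmoid(z)


def input_gradients(weights, bias, x):
    """Derivative of the predicted probability with respect to each input.

    For p = sigmoid(w . x + b), the chain rule gives dp/dx_i = p * (1 - p) * w_i.
    """
    p = predict(weights, bias, x)
    return [p * (1.0 - p) * w for w in weights]


# Hypothetical standardized features and weights (assumptions, not real data).
feature_names = ["credit_score", "loan_amount", "years_in_business"]
weights = [2.0, -1.0, 0.5]
bias = 0.0
applicant = [0.0, 0.0, 0.0]

grads = input_gradients(weights, bias, applicant)

# Rank features by the magnitude of their gradient for this one applicant.
ranked = sorted(zip(feature_names, grads), key=lambda kv: abs(kv[1]), reverse=True)
for name, grad in ranked:
    print(f"{name}: {grad:+.3f}")
```

A larger absolute gradient means a small change in that feature would move this applicant’s prediction more, which is one way to read “relative importance” for a single observation.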

Showing which features are most influential, such as the borrower’s credit score or loan amount, not only enables the lender to offer reasons for a loan decision but could also reveal that a model is behaving strangely. For example, if a feature that should be unrelated to the decision, like marital status or zip code, is heavily weighted, it would be a clue that something is wrong with the model, Blankley said.

To tackle the issue of fairness, the students used statistical tests that compared the decisions the model made for the general population with the decisions it made for a certain protected class of individuals.

“One of the main problems is that all data we have today is inherently biased,” Fosco said. “There are some situations where these models are trained on a certain set of data points that show a bias towards a particular class, which is going to affect the results.”

For example, one of the tests, statistical parity, compares the proportion of positive cases for a protected class with the proportion for the general population. In a model that is fair, the two proportions should be as close to each other as possible.
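The comparison behind statistical parity can be sketched in a few lines. The example below is a minimal illustration under stated assumptions: the decisions and group labels are made-up values, and the function names are hypothetical rather than taken from the students’ tool.

```python
def positive_rate(decisions):
    """Share of positive (approved) decisions in a list of 0/1 values."""
    if not decisions:
        raise ValueError("cannot compute a rate for an empty group")
    return sum(decisions) / len(decisions)


def statistical_parity_difference(decisions, is_protected):
    """Positive rate for the protected class minus the rate for everyone.

    A value near zero means the two proportions are close; a strongly
    negative value means the protected class is approved less often.
    """
    protected = [d for d, p in zip(decisions, is_protected) if p]
    return positive_rate(protected) - positive_rate(decisions)


# Made-up model decisions (1 = approved) and group membership flags.
decisions = [1, 0, 0, 0, 1, 1, 1, 0]
is_protected = [True, True, True, True, False, False, False, False]

gap = statistical_parity_difference(decisions, is_protected)
print(f"protected rate - overall rate = {gap:+.2f}")
```

Here the protected class is approved a quarter of the time while the overall approval rate is one half, so the gap is negative and would flag the model for a closer look.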

The students combined the tools they developed into a dashboard that enables users to input a machine-learning model and a dataset, then receive a report grading the model’s fairness and listing the features that were most significant in the algorithm’s determinations. The dashboard is model agnostic, so it could be used with any machine-learning algorithm and any set of data, Blankley said.
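One way to picture a model-agnostic report is a function that treats the model as a black box, accepting any callable that maps a row of features to a score. The sketch below is an assumption-laden illustration, not the students’ dashboard: it approximates feature sensitivity with central finite differences (a stand-in for input gradients when the model’s internals are unavailable), and every name, threshold, and data value in it is hypothetical.

```python
def finite_difference_importance(predict, rows, eps=1e-4):
    """Mean absolute sensitivity of predict() to each feature.

    Uses central differences, so it needs only the ability to call the model.
    """
    n_features = len(rows[0])
    totals = [0.0] * n_features
    for row in rows:
        for j in range(n_features):
            up, down = list(row), list(row)
            up[j] += eps
            down[j] -= eps
            totals[j] += abs(predict(up) - predict(down)) / (2 * eps)
    return [t / len(rows) for t in totals]


def fairness_report(predict, rows, is_protected, feature_names,
                    threshold=0.5, top_k=2):
    """Summarize a parity gap and the most influential features."""
    decisions = [1 if predict(r) >= threshold else 0 for r in rows]
    protected = [d for d, p in zip(decisions, is_protected) if p]
    gap = sum(protected) / len(protected) - sum(decisions) / len(decisions)
    importance = finite_difference_importance(predict, rows)
    ranked = sorted(zip(feature_names, importance),
                    key=lambda kv: kv[1], reverse=True)
    return {"parity_gap": gap,
            "top_features": [name for name, _ in ranked[:top_k]]}


def toy_model(x):
    """Hypothetical scoring function standing in for any trained model."""
    return 0.5 + 0.3 * x[0] - 0.1 * x[1] + 0.0 * x[2]


rows = [[1.0, 0.0, 0.0], [-1.0, 0.0, 0.0], [1.0, 1.0, 1.0], [-1.0, 1.0, 0.0]]
is_protected = [False, True, False, True]
names = ["feature_a", "feature_b", "feature_c"]

report = fairness_report(toy_model, rows, is_protected, names)
print(report)
```

Because the report only calls `predict`, swapping in a different model requires no change to the reporting code, which is the practical meaning of “model agnostic” in this sketch.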

The biggest challenge the students faced in building the dashboard was defining the scope for the project and choosing the specific tools, reports, and graphs to include, he said. They carefully chose interpretability and fairness tests based on which ones would provide the most useful results for Square, Fosco added.

Distilling the complexity of a machine-learning algorithm into a simple dashboard that could be understood by users was also challenging, since a typical model uses more than 150 dimensions, far more than a human mind can comprehend, Janssen said. Collapsing all that information into a single-page report enables a user to actually understand what is happening inside the “black box” of a machine-learning model.

In addition to helping a company like Square determine fairness, the dashboard could also serve as an effective debugging tool for algorithms used in any number of different situations.

“Increasingly, these machine-learning models are becoming parts of our everyday lives. They are popping up in many businesses and industries, and we are only going to see more in the future,” Janssen said. “But for all their sophistication, with machine-learning models, it is often very difficult to understand why they make the decisions they make. Our system aims to improve that.”

Topics: AI / Machine Learning, Computer Science

Press Contact

Adam Zewe | 617-496-5878 | azewe@seas.harvard.edu