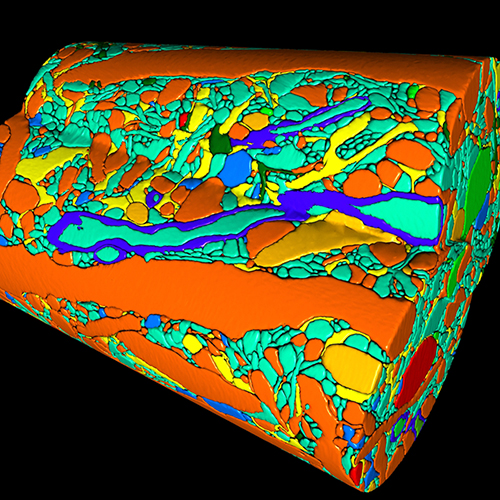

A reconstruction of cortical connectivity (Image courtesy of the Lichtman Lab/Harvard)

Harvard’s John A. Paulson School of Engineering and Applied Sciences (SEAS), Center for Brain Science (CBS), and the Department of Molecular and Cellular Biology have been awarded over $28 million to develop advanced machine learning algorithms by pushing the frontiers of neuroscience.

The Intelligence Advanced Research Projects Activity (IARPA) funds large-scale research programs that address the most difficult challenges facing the intelligence community. Today, intelligence agencies are inundated with data: more than they can analyze in a reasonable amount of time. Humans, though naturally good at recognizing patterns, can’t keep pace. Meanwhile, the pattern-recognition and learning abilities of machines still pale in comparison to those of even the simplest mammalian brains. IARPA’s challenge: figure out why brains are so good at learning, and use that knowledge to design computer systems that can interpret, analyze, and learn from information as successfully as humans do.

To tackle this challenge, Harvard researchers will record activity in the brain's visual cortex in unprecedented detail, map its connections at a scale never before attempted, and reverse-engineer the data to inspire better computer algorithms for learning.

“This is a moonshot challenge, akin to the Human Genome Project in scope,” said project leader David Cox, assistant professor of molecular and cellular biology and computer science. “The scientific value of recording the activity of so many neurons and mapping their connections alone is enormous, but that is only the first half of the project. As we figure out the fundamental principles governing how the brain learns, it's not hard to imagine that we’ll eventually be able to design computer systems that can match, or even outperform, humans.”

These systems could be designed to do everything from detecting network intrusions to reading MRI scans to driving cars.

The research team tackling this challenge includes Jeff Lichtman, the Jeremy R. Knowles Professor of Molecular and Cellular Biology; Hanspeter Pfister, the An Wang Professor of Computer Science; Haim Sompolinsky, the William N. Skirball Professor of Neuroscience; and Ryan Adams, assistant professor of computer science; as well as collaborators from MIT, Notre Dame, New York University, University of Chicago, and Rockefeller University.

(Three-dimensional reconstruction of cortical connectivity from the Lichtman Lab)

The multi-stage effort begins in Cox’s lab, where rats will be trained to recognize various visual objects on a computer screen. As the animals learn, Cox’s team will record the activity of visual neurons using next-generation laser microscopes built for this project with collaborators at Rockefeller University, to see how brain activity changes over the course of learning. Then a one-cubic-millimeter block of the rat's brain will be sent down the hall to Lichtman’s lab, where it will be diced into ultra-thin slices and imaged under the world’s first multi-beam scanning electron microscope, housed in the Center for Brain Science.

“This is an amazing opportunity to see all the intricate details of a full piece of cerebral cortex,” said Lichtman. “We are very excited to get started but have no illusions that this will be easy.”

This painstaking process will generate more than a petabyte of data, equivalent to about 1.6 million CDs’ worth of information. This vast trove will then be sent to Pfister, whose algorithms will reconstruct cell boundaries, synapses, and connections and visualize them in three dimensions.
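As a rough check on the petabyte-to-CD comparison, the short sketch below divides one petabyte by the capacity of a single CD. The unit conventions are assumptions, not figures from the article: treating a petabyte as 2**50 bytes and a CD as 650 MiB gives roughly 1.65 million discs, while decimal units with 700 MB discs give about 1.43 million. Either way, the "about 1.6 million CDs" figure is in the right range.

```python
# Rough check of the "one petabyte is about 1.6 million CDs" comparison.
# Assumption (not from the article): binary units, i.e. 1 PB taken as 2**50 bytes
# and a standard CD taken as 650 MiB.
PETABYTE_BYTES = 2 ** 50
CD_BYTES = 650 * 2 ** 20


def cds_per_petabyte(petabyte_bytes: int = PETABYTE_BYTES, cd_bytes: int = CD_BYTES) -> float:
    """Return how many CDs of capacity cd_bytes it takes to hold petabyte_bytes."""
    return petabyte_bytes / cd_bytes


if __name__ == "__main__":
    # Binary units, 650 MiB discs: about 1.65 million CDs.
    print(f"{cds_per_petabyte():,.0f}")
    # Decimal units, 700 MB discs: about 1.43 million CDs.
    print(f"{cds_per_petabyte(10 ** 15, 700 * 10 ** 6):,.0f}")
```

The function takes both capacities as parameters so the comparison can be re-run under whichever unit convention a reader prefers.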

“This project is not only pushing the boundaries of brain science, it is also pushing the boundaries of what is possible in computer science,” said Pfister. “We will reconstruct neural circuits at an unprecedented scale from petabytes of structural and functional data. This requires us to make new advances in data management, high-performance computing, computer vision, and network analysis.”

If the work stopped here, its scientific impact would already be enormous, but it doesn’t stop there. Once researchers know how visual cortex neurons are connected to each other in three dimensions, the next question is how the brain uses those connections to process information quickly and infer patterns from new stimuli. Today, one of the biggest challenges in computer science is the amount of training data that deep learning systems require. For example, to learn to recognize a car, a computer system needs to see hundreds of thousands of cars. But humans and other mammals don’t need to see an object thousands of times to recognize it; they need to see it only a few times.

In subsequent phases of the project, researchers at Harvard and their collaborators will build computer algorithms for learning and pattern recognition that are inspired and constrained by the connectomics data. The goal is for these biologically inspired algorithms to outperform current computer systems at recognizing patterns and making inferences from limited data. For example, this research could improve computer vision systems that help robots see and navigate through new environments.

"We have a huge task ahead of us in this project, but at the end of the day, this research will help us understand what is special about our brains," Cox said. "One of the most exciting things about this project is that we are working on one of the great remaining achievements for human knowledge: understanding how the brain works at a fundamental level."

Sidebar

This year's Institute for Applied Computational Science (IACS) symposium on the Future of Computation in Science and Engineering will focus on the converging fields of neuroscience, computer science, and machine learning. BRAIN + MACHINES will bring together machine learning experts, neuroscientists, and scholars from several other fields to explore questions like:

- How can humans and intelligent machines work together?

- Does computer science really need neuroscience or is there a better way to design artificial intelligence?

- What does the future hold for brains and machines?

Other topics will include how advances in technology and computation are helping researchers develop treatments for brain disorders such as schizophrenia.

For more information, visit IACS.

Press Contact

Leah Burrows | 617-496-1351 | lburrows@seas.harvard.edu